Du Bois on Da Vinci

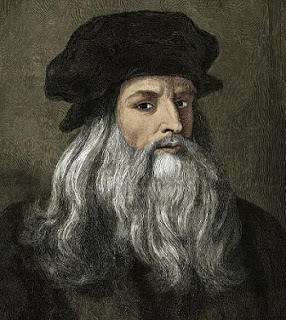

Leonardo Da Vinci: "I was even a pioneer in side-eye and general shade throwing."

A quick write-up on a charming essay by the young Du Bois, written during his time as a graduate student at Harvard. I only found out about it through the fascinating historical work of Trevor Pearce. The essay is entitled Leonardo Da Vinci As A Scientist and is available online here.

Du Bois is concerned to argue that Da Vinci deserves credit as the founder of modern experimental science. His strategy is twofold. The first step is to show that Da Vinci has sufficient (and sufficiently impressive) scientific achievements to merit attention as an early scientist at all. Du Bois does this simply by reviewing historians' reappraisal of Da Vinci's empirical work and his invention of scientific machinery, and showing that this work was indeed impressive. That reappraisal was apparently fairly recent when Du Bois wrote in 1889. This was interesting in itself; for instance, I learned here that Da Vinci was already floating the ide